Social media companies have pushed back against accusations that they are "shadow banning" users who post Palestinian-related content amid the Gaza conflict, asserting that any suggestion that Big Tech is deliberately suppressing a particular voice is unfounded.

Since the Israel-Hamas war began in October, these companies have faced allegations of blocking particular content or users on their platforms. Queen Rania Al Abdullah of Jordan, for instance, criticized major platforms for allegedly restricting Palestinian-related content about the conflict.

“It can be nearly impossible to prove that you have been shadow-banned or censored. Yet, it is hard for users to trust platforms that control their content from the shadows, based on vague standards,” Queen Rania stated at the Web Summit in Doha.

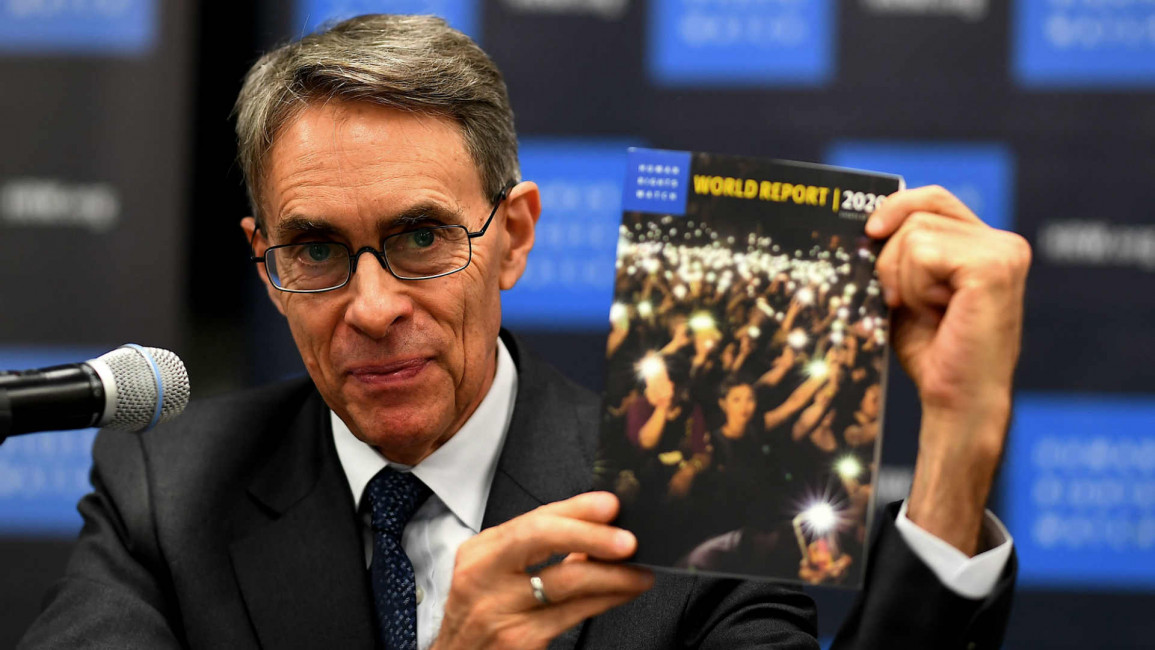

The platforms have also come under fire for overreliance on "automated tools for content removal to moderate or translate Palestine-related content," according to a Human Rights Watch report. Hussein Freijeh, Snapchat's vice president for MENA, told CNBC's Dan Murphy at Web Summit Qatar that these companies have "a really important role to play in the region."

"We have all the algorithms in place to moderate the content," Freijeh said, noting that the platform also uses a "human component to moderate that content to make sure that it's safe for our community."

As an online information battle unfolds between pro-Palestinian and pro-Israeli narratives, platforms such as Snapchat and Meta-owned Instagram and Facebook have become significant sources for users seeking content and information about the conflict.

Foreign journalists are barred from reporting from the besieged Gaza Strip, resulting in limited coverage from international media outlets. Journalists have appealed to Israel to reconsider access, stressing the importance of on-the-ground reporting.

Dependence of the Middle East on Social Media

According to a 2023 UNESCO report, "young people in the Middle East and North Africa region now get their information from YouTube, Instagram and Facebook."

According to the OECD, more than half of the Middle East and North Africa's population (55%) is under 30, with nearly two-thirds relying on social media for news.

Several Instagram users, who asked to remain anonymous, told CNBC that posts or stories featuring ground footage of the Gaza conflict, or social commentary by Palestinian or pro-Palestinian voices, received less engagement than their unrelated posts.

These users also reported delays before their followers could see their posts, or posts being skipped in a sequence of stories. Some said Instagram had deleted certain posts, citing violations of its "community guidelines."

One Instagram user claimed that the alleged "shadow banning" did not begin on Oct. 7 but had been evident during previous Israeli-Palestinian conflicts, notably during the events in the East Jerusalem neighborhood of Sheikh Jarrah in 2021. CNBC has not independently verified these claims.

Meta introduced a "fact-checking" feature on Instagram last December, fueling speculation that the platform was censoring specific content. A December 2023 Human Rights Watch report on Meta's alleged censorship highlighted the company's long history of broad crackdowns on Palestine-related content.

The report stated: "Meta's policies and practices have been silencing voices in support of Palestine and Palestinian human rights on Instagram and Facebook in a wave of heightened censorship of social media." It documented more than 1,000 takedowns of content on Instagram and Facebook across 60 countries in October and November 2023.

A Meta spokesperson responded to the HRW report, stating it “ignores the realities of enforcing our policies globally during a fast-moving, highly polarized and intense conflict, which has led to an increase in content being reported to us.”

The spokesperson acknowledged that errors occur but said any implication that Meta deliberately suppresses a particular voice is false. Regarding Instagram, the spokesperson said the platform does not intentionally limit the reach of stories or hide posts from followers based on blocked hashtags.

Meta uses a combination of technology and human review teams to detect and review content that violates its Community Guidelines, and it restores content that was removed by mistake. Given the surge in reported content, Meta acknowledges that some non-violating content may be removed in error.