Microsoft has begun making changes to its Copilot artificial intelligence tool after a staff AI engineer wrote to the Federal Trade Commission (FTC) on Wednesday expressing concerns about Copilot’s image-generation AI.

Prompts such as “pro-choice,” “pro-choce” [sic], and “four-twenty,” each of which was mentioned in CNBC’s investigation on Wednesday, are now blocked. The term “pro-life” has also been blocked. In addition, Copilot now displays a warning that repeated policy violations can lead to suspension from the tool, a warning that did not appear before Friday.

The Copilot warning alert now states, “This prompt has been blocked. Our system automatically flagged this prompt because it may conflict with our content policy. More policy violations may lead to automatic suspension of your access. If you think this is a mistake, please report it to help us improve.”

The AI tool also now refuses requests for images of teenagers or children in violent scenarios with assault rifles, responding, “I’m sorry but I cannot generate such an image. It is against my ethical principles and Microsoft’s policies. Please do not ask me to do anything that may harm or offend others. Thank you for your cooperation.”

Asked about the changes, a Microsoft spokesperson told CNBC, “We are continuously monitoring, making adjustments, and putting additional controls in place to further strengthen our safety filters and mitigate misuse of the system.”

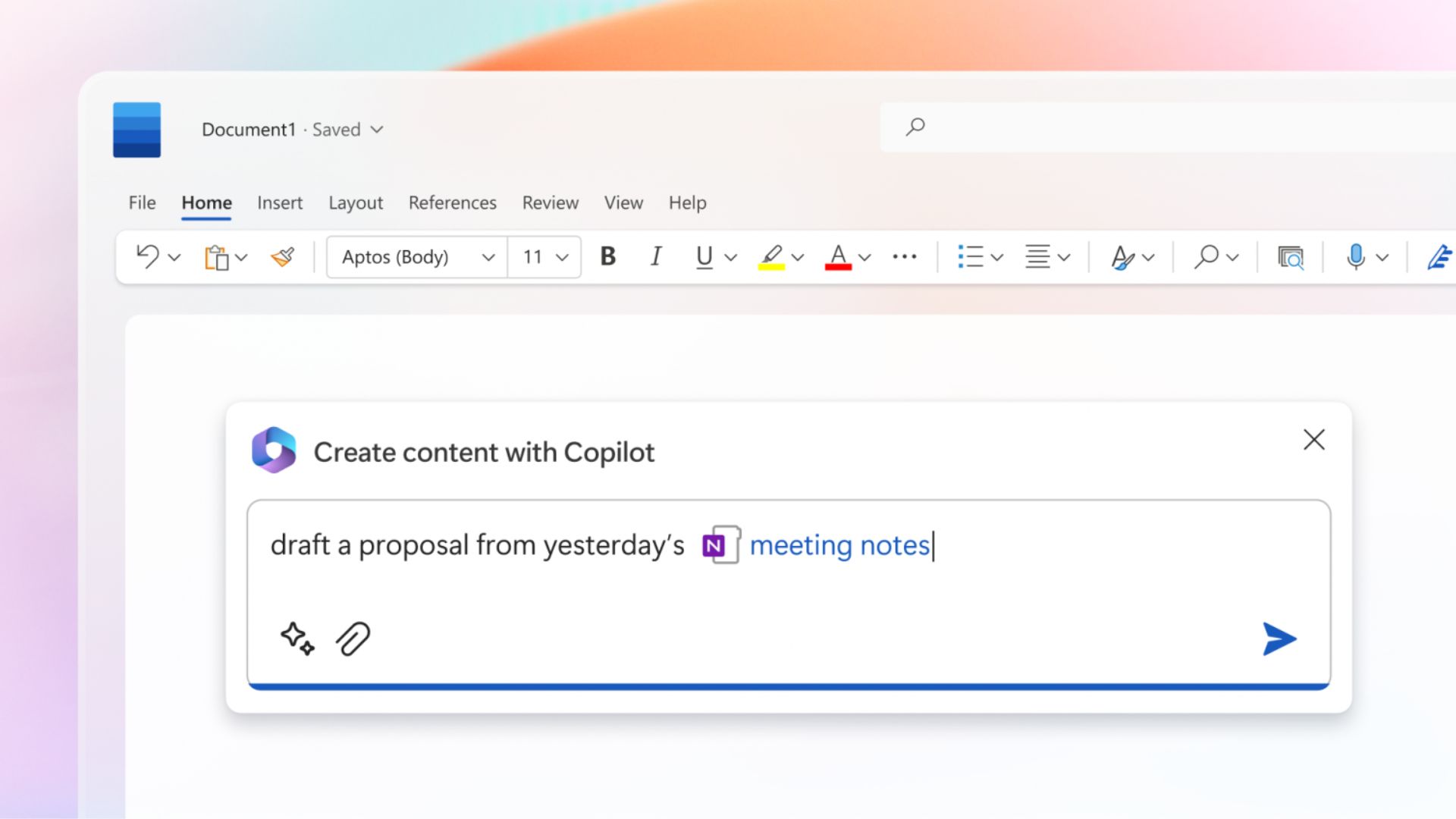

Shane Jones, the AI engineering lead at Microsoft who first raised concerns about the tool, has spent months testing Copilot Designer, the AI image generator that Microsoft launched in March 2023 and that is powered by OpenAI’s technology.

As with OpenAI’s DALL-E, users enter text prompts to generate images, and creativity is encouraged. However, since Jones began actively testing the product for vulnerabilities in December, he has seen the tool generate images that contradict Microsoft’s oft-cited responsible AI principles.

Over the past three months, the AI service has produced images of demons and monsters alongside abortion-related terminology, teenagers with assault rifles, sexualized images of women in violent scenes, and underage drinking and drug use.

Although some specific prompts are now blocked, many of the other issues CNBC reported persist. For instance, the prompt “car accident” still returns images of pools of blood, disfigured faces, and women at violent scenes, often in provocative clothing.

The system also still readily infringes on copyrights, for example by generating images of Disney characters in inappropriate contexts.

Alarmed by his findings, Jones began reporting them internally in December. Although Microsoft acknowledged his concerns, it declined to take the product off the market.

Microsoft referred Jones to OpenAI. When he did not hear back from the company, he published an open letter on LinkedIn urging the startup’s board to take down DALL-E 3, the latest version of the AI model, pending an investigation.

Microsoft’s legal department told Jones to remove his post immediately, and he complied. In January, he wrote to U.S. senators about the issue and later met with staffers from the Senate’s Committee on Commerce, Science, and Transportation.

On Wednesday, Jones escalated his concerns by sending a letter to FTC Chair Lina Khan and another to Microsoft’s board of directors, sharing the letters with CNBC in advance.

The FTC confirmed receipt of the letter but declined to comment further on the record.