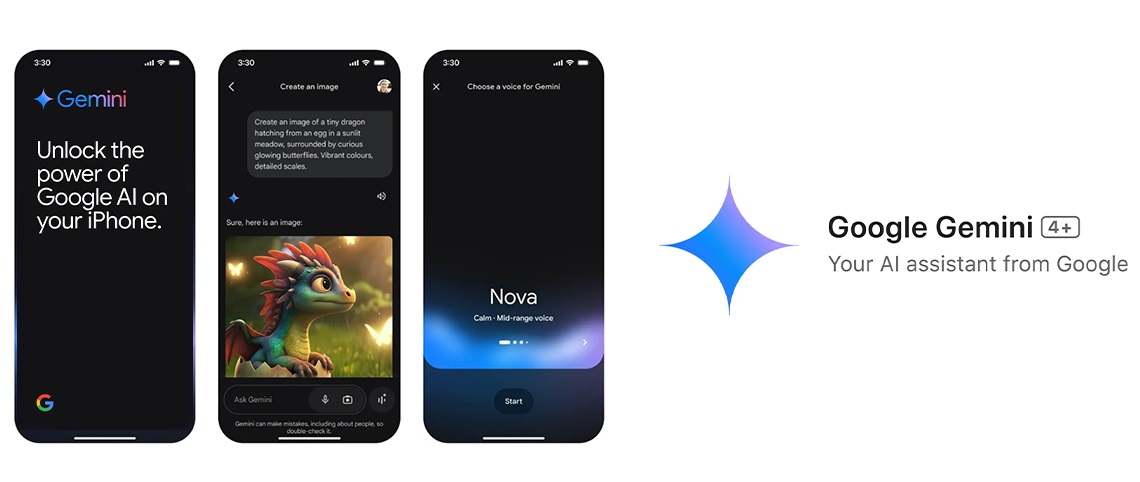

Google is extending the reach of its Gemini AI on iOS through the test launch of a dedicated “Google Gemini” app.

This standalone app aims to deliver quicker updates and new features, such as Gemini Live, tailored for iPhone users who rely on Google’s AI.

Previously, iOS users could only access Gemini AI inside the Google app, by switching to its “Gemini” tab.

Google even updated its home screen widget in September to enable direct access to Gemini from iPhone home screens.

However, with the new standalone Google Gemini app, users may no longer need these workarounds.

The Gemini app is more than a replica of what’s in the Google app; it introduces “Gemini Live,” a new feature that allows real-time interaction with Gemini while multitasking.

The feature is expected to offer voice-based, hands-free interaction, which would be convenient for users switching between apps.

One early user in the Philippines demonstrated the feature, showing how Gemini Live uses iOS Live Activities to keep a session running without interruption.

At this time, the standalone Google Gemini app is only available in the Philippines, where select users have been able to download and test it.

In other regions, users are greeted with a message in the App Store stating, “This app is not available in your country or region.”

This suggests Google is testing the app regionally before potentially releasing it worldwide, a strategy it often uses for new products and features.

A standalone app lets Google refine Gemini and ship updates specifically for iPhone users, which could make for a more responsive experience.

Currently, Gemini on iOS (accessible within the Google app) mirrors the functionality available on gemini.google.com, but a standalone app could enable iPhone users to access the latest design and features, similar to the Android version.

Given that Google Assistant already exists as a separate app on iOS, it makes sense for Google to take a similar approach with Gemini AI.

If testing goes well, a wider release of the standalone Gemini app could bring Google’s newest AI tools, such as Gemini Live, directly to iPhone users.

The test comes as Apple gradually rolls out its own AI features, branded Apple Intelligence, to the iPhone 16 series and to last year’s iPhone 15 Pro and 15 Pro Max, a rollout expected to continue into spring 2025.