- Pakistan’s plan to allocate 2,000 megawatts of surplus electricity for BTC mining and AI data centers has raised “red flags” at the IMF.

- The IMF’s concerns stem from possible energy shortages, a lack of prior consultation, and other issues related to Pakistan’s fragile economy.

- Pakistan’s national strategy includes establishing a national Bitcoin reserve, a government-backed Bitcoin wallet, and the Pakistan Digital Asset Authority (PDAA).

Pakistan’s recent move to allocate 2,000 megawatts of surplus electricity for BTC mining and artificial intelligence data centers has drawn major concern from the International Monetary Fund (IMF).

Some of this tension stems from Pakistan’s ongoing negotiations with the fund over a critical financial aid package.

While the plan is intended to position Pakistan as a digital asset hub, the IMF sees it as a possible risk, both economically and politically. Here are the motivations behind Pakistan’s recent decision, the IMF’s response, and what this clash means for the future of Bitcoin adoption within the country.

Pakistan’s Initial Announcement

In May, Pakistan announced a national strategy aimed at embracing blockchain and AI technologies. The plan centers on allocating 2,000 megawatts of surplus electricity to Bitcoin mining farms and AI data centers.

This move was part of a bigger plan to build a national Bitcoin reserve, launch a government-backed Bitcoin wallet, and establish a new regulatory body called the Pakistan Digital Asset Authority (PDAA).

The Finance Ministry approved the plan, and the PDAA will be responsible for overseeing crypto exchanges, stablecoins, DeFi platforms, and the tokenization of national assets.

The PDAA is also expected to operate within the international guidelines laid out by the Financial Action Task Force (FATF).

The announcement was timed alongside Pakistan’s debut at the Bitcoin Vegas 2025 conference, where Bilal bin Saqib, crypto adviser to Prime Minister Shehbaz Sharif, announced the plans to the rest of the world.

The IMF Responds To The BTC Mining Plan

Just days after this announcement, the IMF raised red flags about the plan and requested urgent clarification from Pakistan’s Finance Ministry.

A separate session has now been scheduled between the IMF and Pakistani officials to discuss the electricity allocation for mining and data centers.

So far, the IMF has laid out its concerns on several fronts, including energy shortages, lack of prior consultation, legal ambiguity, and the economic implications of this plan.

The IMF’s concerns over energy shortages stem from Pakistan’s long struggle with energy deficits. The fund argues that allocating such a large portion of electricity to a non-essential sector like crypto mining could worsen power outages for ordinary citizens.

Moreover, the IMF states that it was not informed ahead of the announcement, which will likely complicate ongoing discussions for the 2025–2026 budget.

In addition, the legal status of cryptocurrencies in Pakistan remains unclear, and the IMF has questioned whether a plan of this scale is even lawful under current legislation.

Finally, the fund is worried about the fiscal impact of this plan on the nation’s economy, especially how it could affect power tariffs, foreign exchange reserves, and resource allocation. The timing of the plan itself couldn’t be more sensitive.

Pakistan just received a $1.02 billion tranche from the IMF’s $7 billion Extended Fund Facility. It also has $22 billion in external debt maturities due next year, and its foreign exchange reserves remain dangerously low.

The IMF Versus Bitcoin

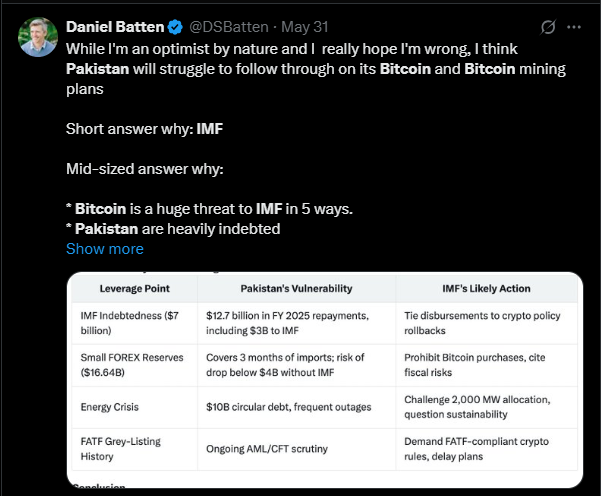

According to New Zealand–based climate tech investor Daniel Batten, Pakistan’s plan is unlikely to survive IMF scrutiny. Batten says that the IMF sees Bitcoin as a direct threat for five reasons, including reduced remittance costs, the loss of seigniorage, and decreased multilateral lending.

Batten notes that the IMF has already discouraged or derailed crypto initiatives in three other countries: El Salvador, the Central African Republic, and Argentina.

He expects a similar outcome in Pakistan, where the country’s economic fragility gives the IMF strong leverage.

Overall, the question remains: Will Pakistan stand firm or fold under pressure? The outcome of this standoff could influence how other countries approach crypto adoption in the years to come.