Micron Technology is scheduled to report fiscal second-quarter results after the bell on Wednesday. With investor enthusiasm for artificial intelligence (AI) running high, semiconductor giants like Micron are drawing increased attention.

On Monday, Nvidia, a key player in AI technology, extended its 2024 rally as it kicked off its GTC conference. The momentum also lifted other AI-related stocks, such as Alphabet and Super Micro Computer.

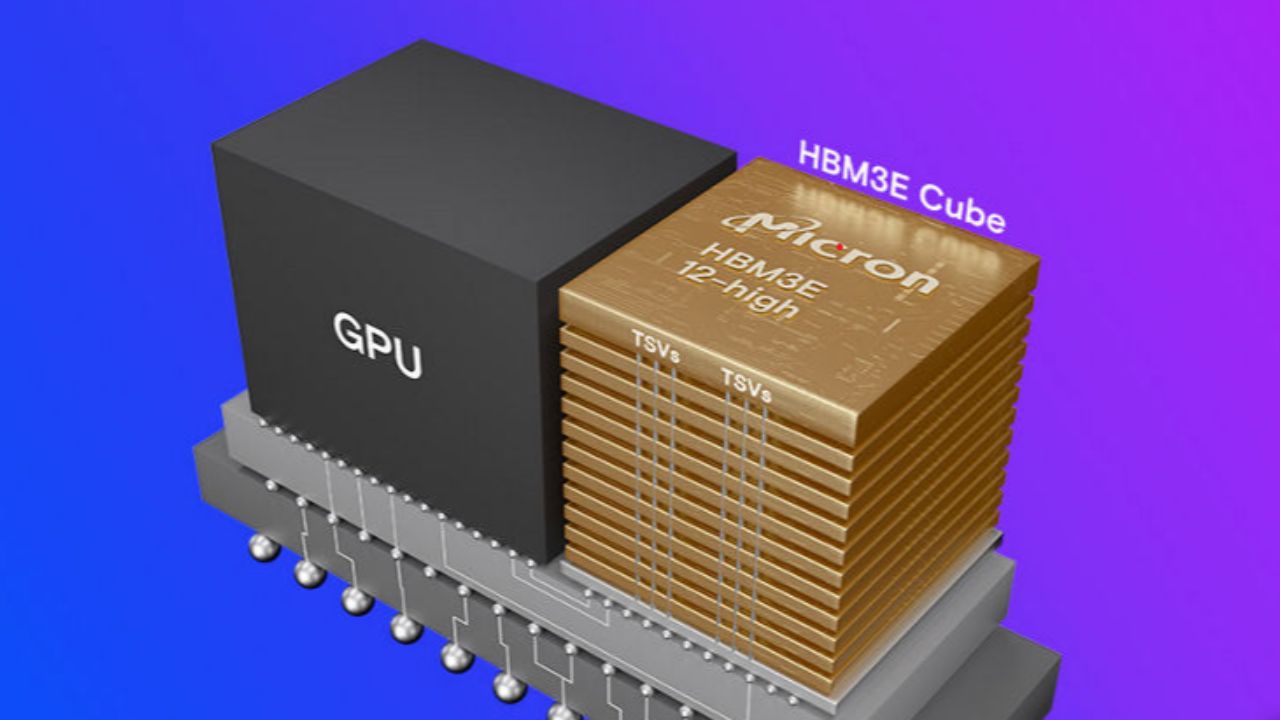

Analysts see Micron's upcoming report as a possible catalyst, pointing to stronger earnings ahead. Much of this optimism rests on rising sales of Micron's High Bandwidth Memory 3E (HBM3E) chips to Nvidia, a product that is important for AI applications.

Micron has emphasized that HBM3E is key to advancing AI, a view analysts share.

Rosenblatt analyst Hans Mosesmann is confident in Micron's position in the current memory cycle and expects strong growth ahead. He rates the stock a buy with a $140 per share price target, which implies substantial upside.

TD Cowen analyst Krish Sankar highlighted the growing market share of Micron's HBM3E and expects it to rise significantly next year. Sankar maintains an outperform rating on the stock with a $120 per share price target, which also implies notable upside.

Although Micron's shares have already gained 12% this year, analysts see room for further gains, especially when compared with industry peers such as Nvidia.

Susquehanna analyst Mehdi Hosseini believes Micron's upcoming guidance could boost investor confidence and that the stock is currently undervalued.

Citi analyst Christopher Danely raised his price target significantly, citing Micron's expanding exposure to AI and pointing to Broadcom (AVGO) and AMD as precedents.

Danely expects Micron to beat consensus estimates and issue strong guidance for the next quarter, driven by robust DRAM pricing and shipments of higher-margin HBM.

Micron's upcoming earnings report is shaping up as a key event, with analysts optimistic about the company's outlook given its growing role in AI and its success in selling memory chips.