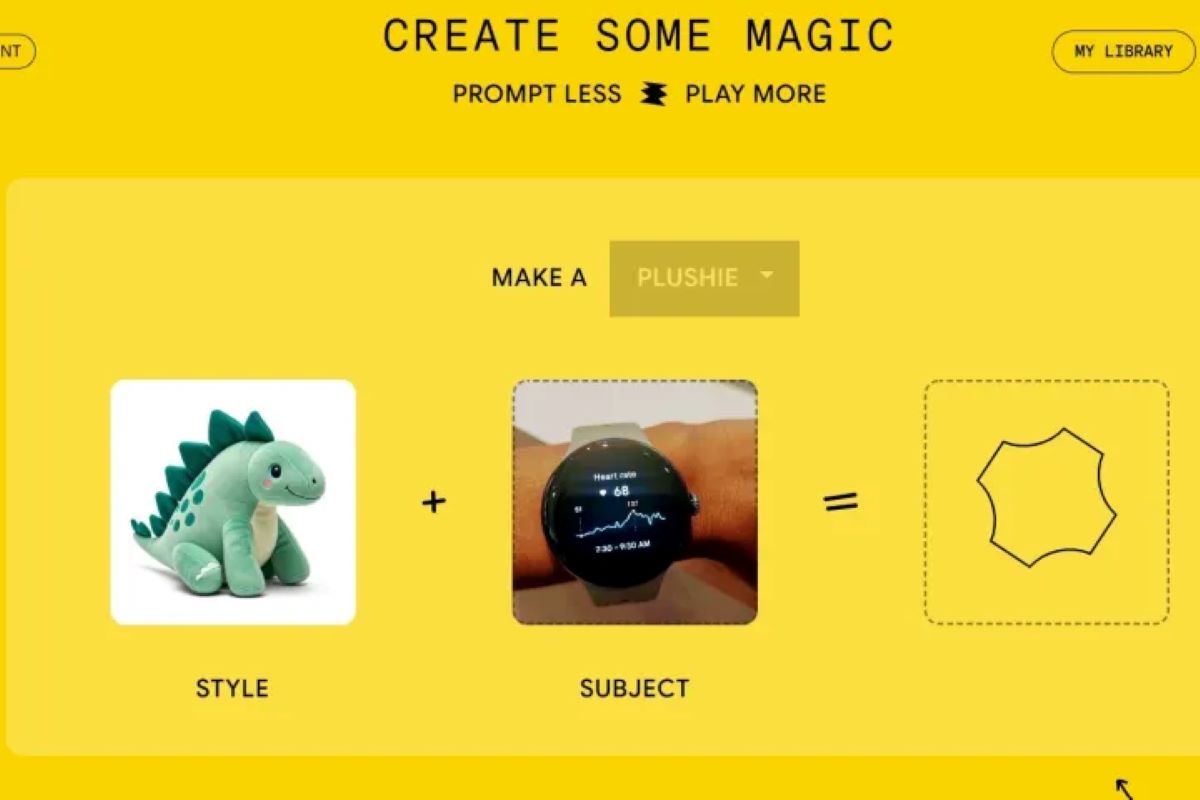

Google’s latest artificial intelligence tool, “Whisk,” enables users to upload photos and receive a combined, AI-generated image without needing to input any text to specify their preferences.

By uploading images that represent the subjects, settings, and styles they have in mind, users allow Whisk to merge these elements into a single cohesive image.

In a blog post, Google described Whisk as a “creative tool” designed for rapid inspiration rather than a “traditional image editor,” positioning it as a playful way to spark ideas, not a means of producing polished professional artwork.

The introduction of Whisk highlights the ongoing race among major tech companies like Google and OpenAI to develop consumer-oriented products that showcase the potential of cutting-edge technology. However, critics caution that the fast-paced development of AI without proper safeguards could pose significant risks to society.

Since OpenAI launched its text-to-image generation tool, DALL-E, in 2021, AI-generated artwork has proliferated on social media and become a prominent feature in consumer products. Building on the popularity of text-to-image tools, Google’s Whisk functions as an image-to-image generator.

Users of Whisk can “remix” their final image by modifying the input parameters and experimenting with categories to create variations such as plush toys, enamel pins, or stickers. While users can add text to guide specific details, text input is not required to generate images.

“Whisk is designed to allow users to remix a subject, scene, and style in new and creative ways, offering rapid visual exploration instead of pixel-perfect edits,” explained Thomas Iljic, Director of Product Management at Google Labs, in a statement.

Whisk draws on generative AI technology developed by DeepMind, the AI research lab Google acquired in 2014. The tool pairs Gemini, Google’s core AI platform launched in December 2023, with Imagen 3, DeepMind’s latest text-to-image generator.

When users upload images to Whisk, Gemini generates a caption based on the uploaded content, and that caption, rather than the image itself, is then processed by Imagen 3. Because only the description carries over, this method captures the “essence” of the subject rather than creating an exact replica, which facilitates creative remixing but also means the output can deviate from the original input.

For instance, the resulting image might feature variations in height, hairstyle, or skin tone compared to the original input images, as Google noted in its blog post.
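
The caption-then-generate flow described above can be sketched in a few lines of Python. This is an illustrative outline only, not Google’s implementation: the `WhiskInputs` class, the `build_prompt` and `remix` functions, the prompt layout, and the idea of captioning each image separately are all assumptions made for the example, and the `caption` and `generate` callables are hypothetical stand-ins for Gemini and Imagen 3 rather than real API calls.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical stand-ins: these signatures are NOT real Gemini or Imagen 3 APIs.
Captioner = Callable[[bytes], str]   # image bytes -> text caption
Generator = Callable[[str], bytes]   # text prompt -> generated image bytes


@dataclass
class WhiskInputs:
    """The three image roles the article describes, plus optional text."""
    subject: bytes
    scene: bytes
    style: bytes
    extra_text: Optional[str] = None  # optional guidance; not required


def build_prompt(inputs: WhiskInputs, caption: Captioner) -> str:
    """Caption each image and join the captions into one text prompt.

    The prompt layout is an assumption made for illustration.
    """
    parts = [
        f"Subject: {caption(inputs.subject)}",
        f"Scene: {caption(inputs.scene)}",
        f"Style: {caption(inputs.style)}",
    ]
    if inputs.extra_text:
        parts.append(f"Details: {inputs.extra_text}")
    return "\n".join(parts)


def remix(inputs: WhiskInputs, caption: Captioner, generate: Generator) -> bytes:
    """Generate an image from captions alone; the source pixels never reach
    the generator, so details the caption omits may change in the output."""
    return generate(build_prompt(inputs, caption))


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs without any external service.
    fake_caption = lambda image: image.decode()
    fake_generate = lambda prompt: prompt.encode()
    result = remix(
        WhiskInputs(b"a corgi", b"a beach", b"watercolor"),
        fake_caption,
        fake_generate,
    )
    print(result.decode())
```

Under these assumptions, anything the caption leaves out, such as exact height or hairstyle, is simply unavailable to the generator, which is one way to understand the deviations Google mentions.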

Whisk’s introduction follows earlier challenges faced by Google’s Gemini platform. When Gemini’s text-to-image creator was launched in February 2024, it drew criticism for generating historically inaccurate images.

Currently, Whisk is available as a website on Google Labs for users in the United States and remains in the early stages of development.

Competitors are moving as well: OpenAI recently unveiled a text-to-video generator called Sora, underscoring the rivalry among tech giants to deliver groundbreaking consumer AI tools.

Dan Ives, Managing Director and Senior Equity Analyst at Wedbush Securities, described Whisk as another example of Google flexing its muscles in the AI and tech arena.

“DeepMind is a key asset for Google,” Ives stated, highlighting AI’s central role in Google’s 2025 product strategy, which also includes a new Android operating system developed in partnership with Samsung and Qualcomm.